Image Recognition and Processing for Navigation (IRPN)

| Programme: | GSTP | Achieved TRL: | 5 |

| Reference: | G61C-029EC | Closure: | 2018 |

| Contractor(s): | Technical University Dresden (DE, Prime), Airbus Defence & Space (DE), Astos Solutions (DE), Jena-Optronik (DE) | | |

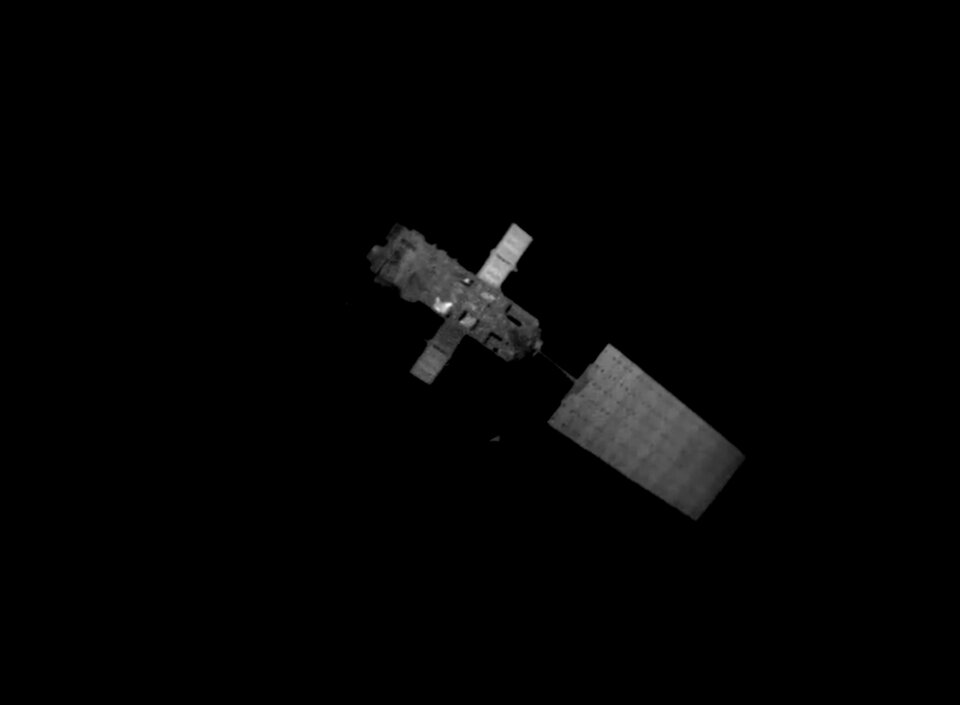

ESA has identified Active Debris Removal as a strategic goal, as it is necessary to stabilise the growth of space debris. This activity closes a capability gap in image processing for the precise estimation of the position, pose and angular motion of uncooperative targets in an Active Debris Removal scenario, using cameras in the visual and infrared ranges of the spectrum and LIDAR sensors. Results had to be computed both with each sensor alone and with the combined suite, making it possible to identify the pros and cons of each sensor and its specific contribution to the overall performance. Characterisation of the infrared sensor was key, as was the evaluation and analysis of the difficulties of testing it on-ground and the workarounds required.

Objectives

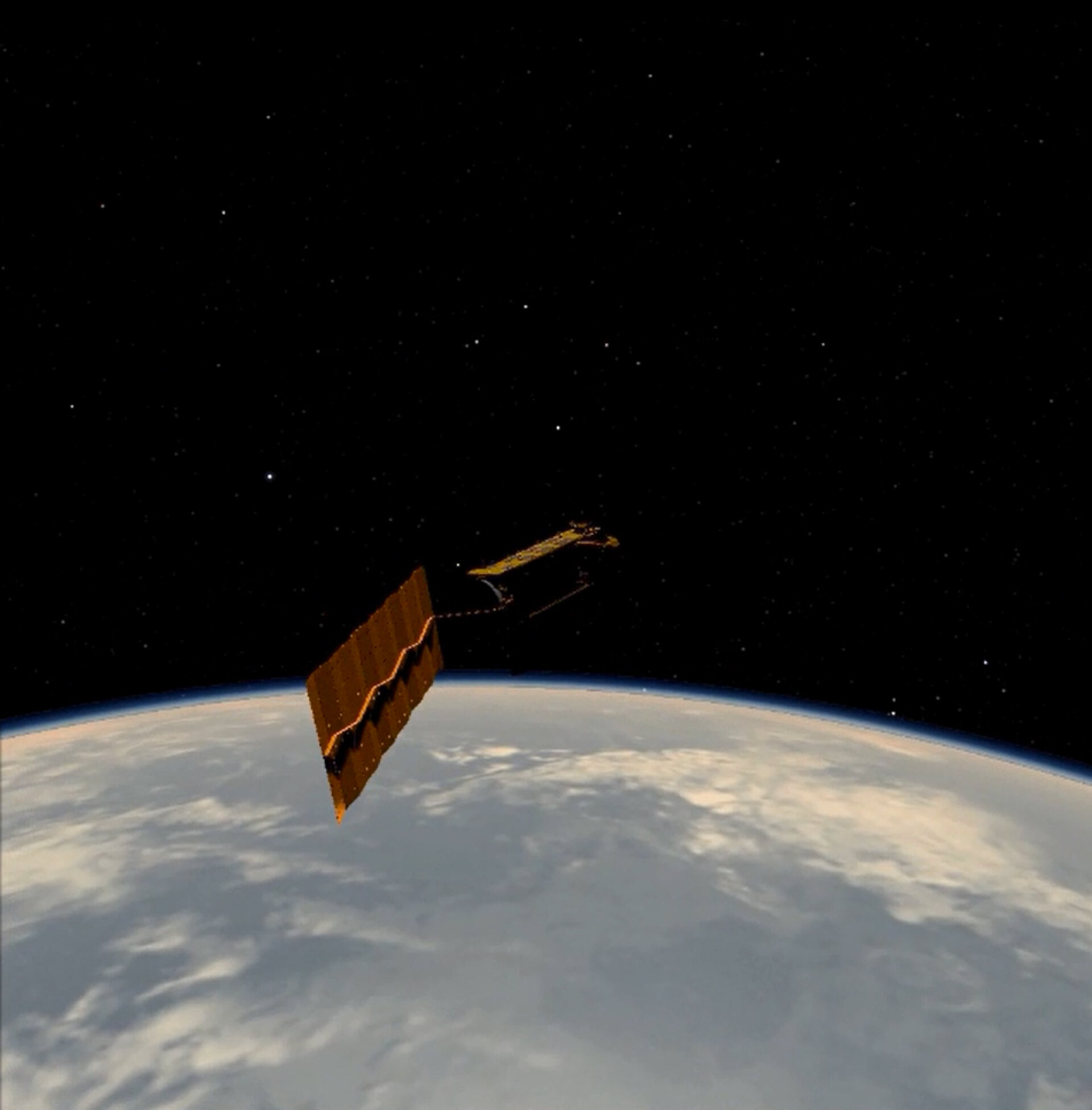

This activity aimed at the design, development and verification of the image processing and navigation capabilities needed to estimate position, pose and angular motion in a scenario with uncooperative targets in an Active Debris Removal configuration. A complete set of synthetic image sequences had to be generated to represent different illumination conditions along the approach trajectory of the chaser spacecraft to the target spacecraft, providing confidence in the robustness of the navigation solution.

Achievements and status

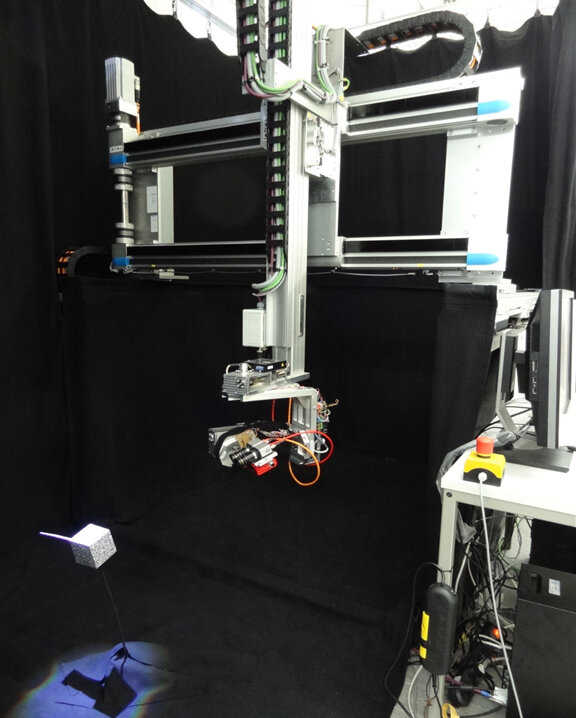

Estimates of the relative chaser-target geometry are provided by dedicated image recognition and processing (IRP) algorithms and by sensor fusion algorithms in a navigation function (NAV). These use data from cameras in the visible spectrum (VIS) and infrared spectrum (IR) and from light detection and ranging (LIDAR) sensors. The reference mission is the Active Debris Removal of ENVISAT, with relative distances from 100 m in the far range to 2 m in the close range. All IRP algorithms and the overall IRPN system have been evaluated extensively in different test environments: model-in-the-loop (MIL) and processor-in-the-loop (PIL) with synthetic (rendered) images. The VIS and IR algorithms have also been tested with real images obtained in a laboratory set-up with a hardware-in-the-loop (HIL) facility using several mock-ups and a robotic facility. The activity has provided useful lessons on testing the algorithms and sensors in an on-ground facility before the actual mission flies. This experience was especially informative regarding the difficulty of creating a representative environment for the IR cameras and of combining synthetic and real images.
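To illustrate what combining individual sensor estimates in a navigation function can look like, the following is a minimal sketch of inverse-variance weighting applied to relative range estimates. It is not the fusion algorithm used in the IRPN activity, which is not described here. The names `RangeMeasurement` and `fuse_ranges`, the `in_eclipse` flag, and all numerical values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass
class RangeMeasurement:
    """Hypothetical container for one sensor's relative range estimate."""
    sensor: str      # "VIS", "IR" or "LIDAR"
    range_m: float   # estimated chaser-target distance in metres
    sigma_m: float   # assumed 1-sigma uncertainty in metres


def fuse_ranges(measurements, in_eclipse=False):
    """Inverse-variance weighted mean of independent range estimates.

    Assumption for this sketch: VIS measurements are discarded during
    eclipse, while IR and LIDAR measurements remain usable.
    """
    usable = [m for m in measurements
              if not (in_eclipse and m.sensor == "VIS")]
    if not usable:
        raise ValueError("no usable measurements")

    # Each measurement is weighted by the inverse of its variance.
    weights = [1.0 / m.sigma_m ** 2 for m in usable]
    total = sum(weights)
    fused = sum(w * m.range_m for w, m in zip(weights, usable)) / total
    fused_sigma = sqrt(1.0 / total)
    return fused, fused_sigma


if __name__ == "__main__":
    # Illustrative values only, not results from the activity.
    data = [
        RangeMeasurement("VIS", 50.0, 1.0),
        RangeMeasurement("IR", 51.0, 2.0),
        RangeMeasurement("LIDAR", 50.4, 0.2),
    ]
    print(fuse_ranges(data))
    print(fuse_ranges(data, in_eclipse=True))
```

Running each sensor alone through the same function (a list with one measurement) and comparing against the combined result mirrors, in a very simplified way, the single-sensor versus combined-suite comparison described above.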

Benefits

The activity has increased the TRL of the technologies under development using visual and infrared cameras and LIDAR, with many lessons learnt for on-ground testing that can be applied in future projects. The advantages of passive thermal infrared sensors were demonstrated for orbits with significant eclipse periods, where operations can continue in situations in which this would not be possible with visual sensors alone.

Next steps

A continuation of the project aims at implementing a number of meaningful Image Processing (IP) functions on flight-representative processors (LEON-4 and FPGA) for real-time applications and missions. This includes defining a scalable architecture for such a flight configuration and a comprehensive assessment of what is needed to migrate the complete system to such a platform.