Astro Drone - A crowdsourcing game to improve vision algorithms

Animals learn only from their own visual experiences. Robots are not limited in this respect: they can directly exchange raw camera images or, to limit bandwidth, abstract visual features extracted from those images. Such an exchange could speed up the visual learning process while giving each robot a much broader visual experience than it could gather in a single-robot scenario.

Appearance-based variation cue

In this project, we investigate whether it is possible to learn the distance to obstacles just by looking at the variation in their appearance. The underlying assumption is that textures and colors vary less as a robot approaches a wall or other large object, because the obstacle fills an increasing part of the camera's field of view.

To measure this variation, the probability distribution of the occurrence of different textures is estimated [1], and the Shannon entropy [2] of this distribution is then calculated as a measure of the amount of variation. On a set of 385 image sequences, the entropy decreased as the camera approached an obstacle in 88.6% of the cases. However, the dataset used to test the cue had limitations: most of the image sequences were simulated by scaling a single image, and the real sequences were recorded with handheld cameras, probably in a limited variety of locations.

This brings us to one of the goals of the crowdsourcing experiment: gathering larger and more varied datasets recorded with real robots. With such datasets, novel cues such as appearance variation can be trained and tested more thoroughly. By letting users all over the world contribute to the dataset, we hope to improve the robustness of monocular distance cues.
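The entropy measure can be sketched in a few lines of Python. This is a minimal sketch assuming a texton-style estimate of the texture distribution: each small image patch is assigned to its nearest entry in a dictionary of prototype patches ("textons"), the assignment counts are normalized into a probability distribution p, and its Shannon entropy H = -Σ p_i log2 p_i is computed. The dictionary `textons`, the Euclidean nearest-neighbour assignment and the function names are illustrative assumptions; learning the dictionary (for example with k-means) is not shown.

```python
import numpy as np

def texton_histogram(patches: np.ndarray, textons: np.ndarray) -> np.ndarray:
    """Assign each flattened patch to its nearest texton (Euclidean distance)
    and return the normalized occurrence histogram p(texton).

    patches: (N, D) array of flattened patches.
    textons: (K, D) array of prototype patches (assumed to be given).
    """
    # Squared distances between every patch and every texton: shape (N, K)
    dists = ((patches[:, None, :] - textons[None, :, :]) ** 2).sum(axis=2)
    nearest = dists.argmin(axis=1)
    counts = np.bincount(nearest, minlength=len(textons)).astype(float)
    return counts / counts.sum()

def shannon_entropy(p: np.ndarray) -> float:
    """Shannon entropy in bits; zero-probability bins contribute nothing."""
    p = p[p > 0]
    return float(-(p * np.log2(p)).sum())
```

A uniform distribution over four textons gives 2 bits, the maximum for K = 4, while patches that all map to the same texton give 0 bits, which is the low-variation situation expected close to an obstacle.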

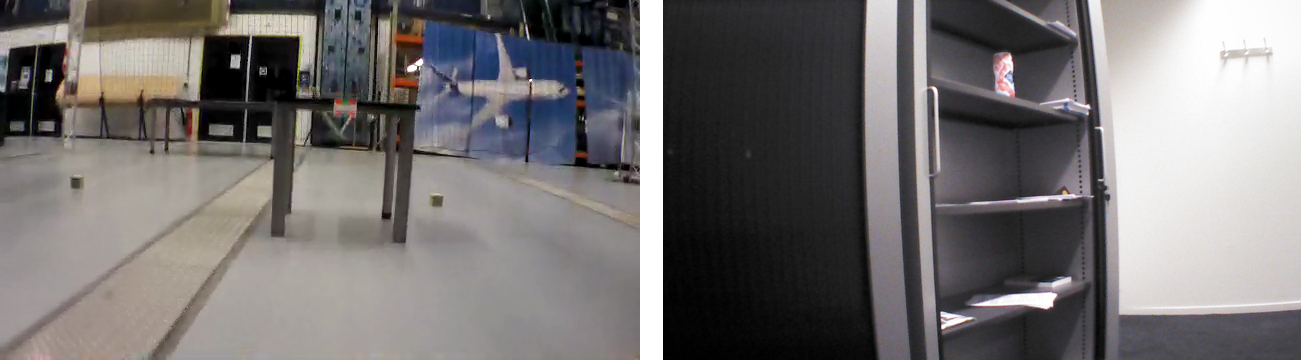

During the game, images will be taken at 10 evenly spaced distances to the marker. When the level is finished, very small 5x5-pixel patches will be extracted from these images. If the player agrees, these patches are sent to a database together with the drone's state information (height, velocities, attitude angles, etc.). No complete images are sent, and the images cannot be reconstructed from the transmitted information. The images below show several examples of drone images together with their extracted patches.
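The patch extraction step might look like the sketch below. The text only fixes the patch size (5x5 pixels); the number of patches per image, the uniform random sampling of their positions and the function name `extract_patches` are assumptions made for illustration.

```python
import numpy as np

PATCH_SIZE = 5  # 5x5-pixel patches, as described in the text

def extract_patches(image: np.ndarray, n_patches: int,
                    rng: np.random.Generator) -> np.ndarray:
    """Sample n_patches square patches at uniformly random positions.

    image: (H, W) or (H, W, C) array.
    Returns an array of shape (n_patches, 5, 5) or (n_patches, 5, 5, C).
    """
    h, w = image.shape[:2]
    # Top-left corners chosen so that every patch lies fully inside the image
    ys = rng.integers(0, h - PATCH_SIZE + 1, size=n_patches)
    xs = rng.integers(0, w - PATCH_SIZE + 1, size=n_patches)
    return np.stack([image[y:y + PATCH_SIZE, x:x + PATCH_SIZE]
                     for y, x in zip(ys, xs)])
```

With, say, 100 patches taken from a hypothetical 640x360 frame, only 2,500 of its 230,400 pixels (about 1.1%) would be transmitted, scattered across the image.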

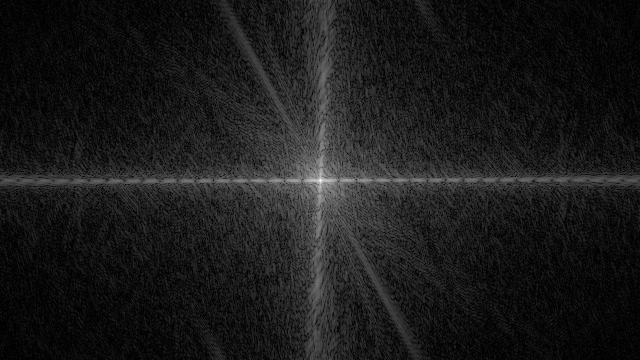

Besides collecting image patches, the experiment aims to measure the variation within the crowdsourced dataset. To capture how the scene in an image is structured, the squared magnitude of the Discrete Fourier Transform (DFT) of one image, i.e. its power spectrum, will be sent. Before this power spectrum is calculated, the image is converted to gray-scale and a Hanning window is applied. An example of an image taken with the drone, the preprocessed image and the corresponding DFT can be seen below.
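The preprocessing and power-spectrum computation described above can be sketched with NumPy. The gray-scale conversion uses ITU-R BT.601 luma weights and the 2-D window is built as the outer product of two 1-D Hanning windows; both choices are assumptions, since the text does not specify how the app implements these steps. The function name `power_spectrum` is hypothetical.

```python
import numpy as np

def power_spectrum(rgb: np.ndarray) -> np.ndarray:
    """Gray-scale conversion, 2-D Hanning window, then squared DFT magnitude.

    rgb: (H, W, 3) array. Returns an (H, W) array with the zero-frequency
    term shifted to the centre for easier inspection.
    """
    # ITU-R BT.601 luma weights (an assumption; the app's conversion may differ)
    gray = rgb[..., :3].astype(float) @ np.array([0.299, 0.587, 0.114])
    h, w = gray.shape
    # Separable 2-D Hanning window: outer product of two 1-D windows
    window = np.outer(np.hanning(h), np.hanning(w))
    spectrum = np.fft.fft2(gray * window)
    return np.fft.fftshift(np.abs(spectrum) ** 2)
```

The window tapers the image to zero at its borders. Without it, the DFT's implicit periodic tiling of the image would create artificial discontinuities at the edges, which show up as spurious energy along the horizontal and vertical frequency axes.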

The power spectrum alone cannot be used to restore the original image. The Fourier transform of an image consists of complex numbers, and restoring the original image from the transformed one requires both the real and imaginary components [3], or equivalently both the magnitude and the phase. The power spectrum keeps only the magnitude and discards the phase.
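A toy demonstration of why the magnitude alone is not enough: circularly translating an image, or rotating it by 180 degrees, changes only the phase of its DFT, so these visibly different images have identical power spectra. The sketch uses random data rather than drone images, applies the plain (unwindowed) DFT, and the helper name `power` is hypothetical.

```python
import numpy as np

def power(x: np.ndarray) -> np.ndarray:
    """Squared magnitude of the 2-D DFT (the phase is discarded)."""
    return np.abs(np.fft.fft2(x)) ** 2

rng = np.random.default_rng(0)
img = rng.random((16, 16))

# Two images that differ from img pixel by pixel...
shifted = np.roll(img, shift=(3, 5), axis=(0, 1))  # circular translation
rotated = img[::-1, ::-1]                          # 180-degree rotation

# ...yet share its power spectrum (up to floating-point error).
for other in (shifted, rotated):
    print(np.allclose(other, img), np.allclose(power(other), power(img)))  # False True
```

Since many different images map to the same power spectrum, the spectrum by itself cannot single out the image it was computed from.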

Because the data extracted in the app contain only the magnitude of the transformed image, there is insufficient information to restore the images. However, the power spectrum does contain information about the geometrical structure of the image [4, 5]. It will therefore help in analyzing the variety of images captured with 'Astro Drone'.
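As a simplified illustration of how the power spectrum encodes geometric structure, the sketch below measures which share of the non-DC power lies on each of the two frequency axes. This is a crude stand-in, not the more elaborate spectral descriptors of [4, 5]; the function name and the axis-share measure are assumptions.

```python
import numpy as np

def axis_energy_shares(power: np.ndarray) -> tuple[float, float]:
    """Fractions of non-DC power on the fx axis and on the fy axis.

    Expects an unshifted spectrum (DC term at [0, 0]), for example
    np.abs(np.fft.fft2(img)) ** 2.
    Power on the fx axis (first row) comes from intensity changes along x,
    i.e. vertical edges; power on the fy axis (first column) comes from
    intensity changes along y, i.e. horizontal edges.
    """
    p = np.asarray(power, dtype=float).copy()
    p[0, 0] = 0.0  # drop the DC term, which only reflects mean brightness
    total = p.sum()
    return float(p[0, 1:].sum() / total), float(p[1:, 0].sum() / total)

# Vertical stripes: intensity varies along x (columns) only.
x = np.arange(64)
stripes = np.tile(np.sin(2 * np.pi * 4 * x / 64), (64, 1))
fx_share, fy_share = axis_energy_shares(np.abs(np.fft.fft2(stripes)) ** 2)
```

For the stripe pattern nearly all power lands on the fx axis. On real images, which are windowed first, energy spreads into neighbouring frequency bins, so summing over a narrow band around each axis would be more robust than using the axes alone.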

This project will be conducted in collaboration with the Micro Air Vehicle laboratory of TU Delft and the Artificial Intelligence group at Radboud University Nijmegen.


References

- [1] Croon, G.C.H.E. de, E. de Weerdt, C. De Wagter, B.D.W. Remes, and R. Ruijsink. 2012. “The Appearance Variation Cue for Obstacle Avoidance.” IEEE Transactions on Robotics 28 (2): 529–34.

- [2] Shannon, Claude Elwood. 2001. “A Mathematical Theory of Communication.” ACM SIGMOBILE Mobile Computing and Communications Review 5 (1): 3–55.

- [3] Young, Ian T., Jan J. Gerbrands, and Lucas J. Van Vliet. 1998. Fundamentals of Image Processing. Delft: Delft University of Technology.

- [4] Torralba, Antonio, and Aude Oliva. 2003. “Statistics of Natural Image Categories.” Network: Computation in Neural Systems 14 (3): 391–412.

- [5] Oliva, Aude, Antonio Torralba, Anne Guérin-Dugué, and Jeanny Hérault. 1999. “Global Semantic Classification of Scenes Using Power Spectrum Templates.” In Proceedings of the 1999 International Conference on Challenge of Image Retrieval, 9. BCS Learning & Development Ltd.