Continual online learning with spiking neural networks

Background and motivation

The deployment of probes, satellites, rovers and other exploratory equipment to ever more distant regions of the Solar System is likely to become increasingly common. The farther a probe is from the Sun, the less solar power is available and the more stringent its energy budget becomes, which is why we are investigating the viability of spiking neural networks (SNNs) [1], as well as neuromorphic hardware that can run SNN models efficiently, for onboard inference.

However, energy is not the only constraint in such missions. Demand for autonomy is also likely to increase, since maintaining an open communication channel for issuing commands and receiving data will become infeasible for equipment operating far from the Earth. In particular, because little or no data can be collected in advance for training, (neural) controllers for exploratory equipment will likely need not only to operate but also to adapt and learn actively in uncertain environments. Therefore, this project aims to go a step further and explore the feasibility of giving SNNs the ability to adapt and learn continually throughout their deployment.

Project overview

Most biological organisms do not have the luxury of accumulating a large body of data and experience before having to navigate, explore and exploit their environment; they have to learn to survive and thrive on the fly. In contrast, current AI systems are almost exclusively trained on a particular task using a large amount of data collected prior to training, so the properties of the data (type, statistical distribution and so on) are known before the training phase begins. The ability to adapt and learn continually is therefore perhaps one of the most profound differences between current AI systems and biological organisms.

When comparing how artificial and biological systems learn on the fly, one feature of biological systems that stands out is adaptation. Various homeostatic processes observed in neurons throughout the nervous system act together to ensure that the activity of individual neurons (and of the brain as a whole) remains sparse and stable in the presence of fluctuating input [2-9]. We therefore look deeper into the neuroscience roots of SNNs and draw inspiration from processes observed in biological neurons, with the aim of replicating homeostatic principles that may enable SNNs to maintain sparse and stable activity while learning continually.

In this project, we will model the following homeostatic mechanisms observed in biological neurons:

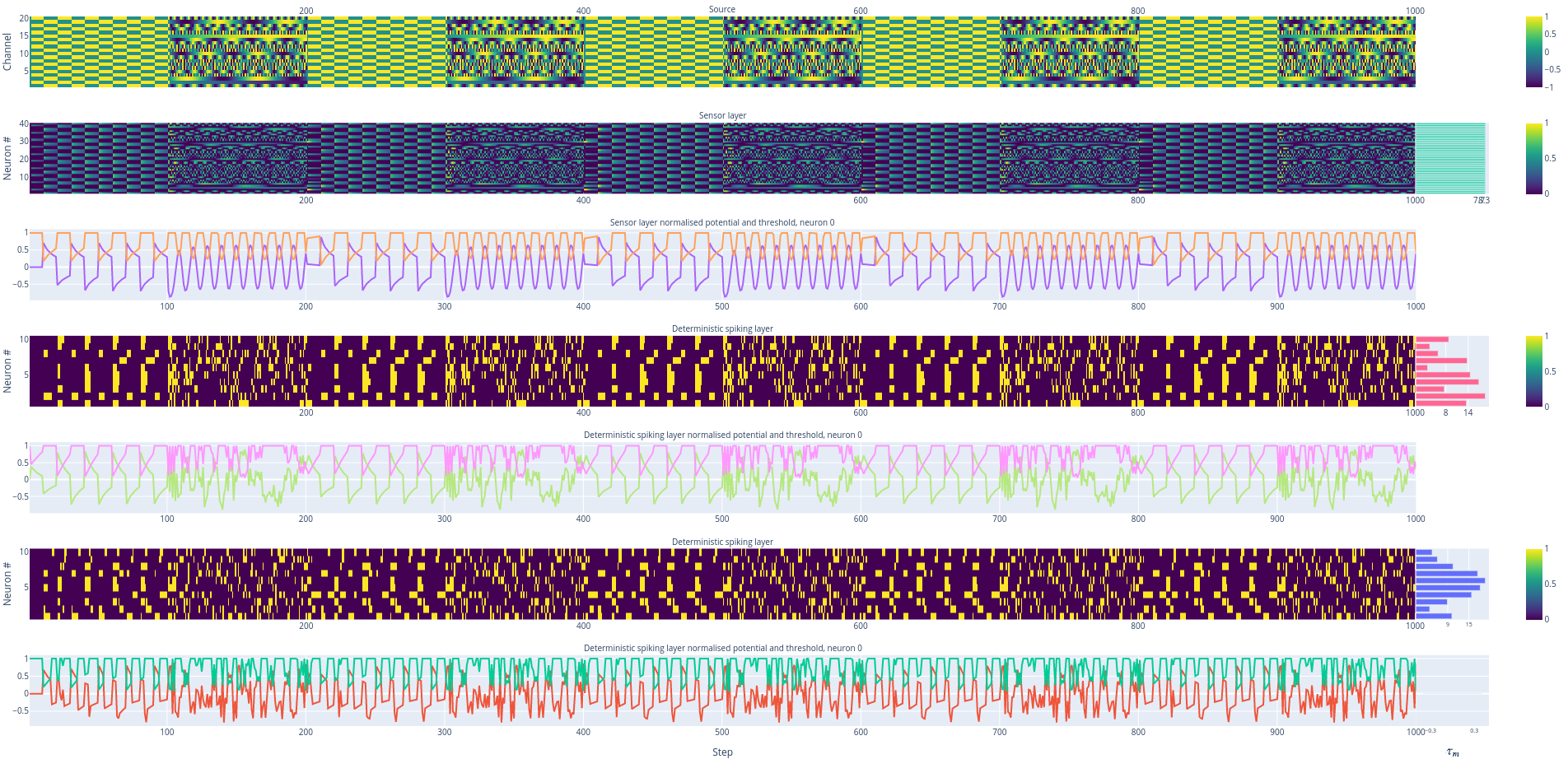

Self-normalisation: A membrane potential update mechanism that reflects the ability of the neuron to adapt to fluctuations in the input.

Synaptic scaling: A homeostatic mechanism that neurons employ in order to handle variability in both the number of synapses and the magnitude of signals that they generate.

Threshold adaptation: A two-factor adaptation mechanism that enables the neuron threshold to adapt to low-frequency fluctuations in the membrane potential (as modelled by the self-normalisation mechanism) while remaining sensitive to high-frequency variations.
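The three mechanisms above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the project's formulation: the class `AdaptiveNeuron`, the function `scale_synapses`, every parameter name and value, and the specific update equations are hypothetical. Self-normalisation is read here as integrating only the deviation of the input from its running mean, synaptic scaling as a multiplicative rescaling of the weights to a fixed total magnitude, and the two factors of threshold adaptation as a slow baseline that follows the membrane potential plus a fast spike-triggered term.

```python
from dataclasses import dataclass
from typing import List


def scale_synapses(weights: List[float], budget: float = 1.0) -> List[float]:
    """Synaptic scaling (assumed form): multiplicatively rescale all weights so
    that their summed magnitude equals a fixed budget, whatever the number or
    size of the individual synapses."""
    total = sum(abs(w) for w in weights)
    if total == 0.0:
        return list(weights)
    return [w * budget / total for w in weights]


@dataclass
class AdaptiveNeuron:
    """Toy discrete-time spiking neuron; all parameters are hypothetical."""
    weights: List[float]
    leak: float = 0.9         # fraction of membrane potential kept per step
    mean_rate: float = 0.01   # how quickly the running input mean is tracked
    slow_rate: float = 0.05   # how quickly the threshold baseline follows v
    margin: float = 0.5       # amount by which v must exceed the baseline
    jump: float = 1.0         # spike-triggered threshold increase
    jump_decay: float = 0.9   # per-step decay of the spike-triggered term
    input_mean: float = 0.0
    v: float = 0.0
    slow_v: float = 0.0
    fast: float = 0.0

    def __post_init__(self) -> None:
        self.weights = scale_synapses(self.weights)

    def step(self, inputs: List[float]) -> bool:
        drive = sum(w * x for w, x in zip(self.weights, inputs))
        # Self-normalisation (assumed form): integrate only the deviation of
        # the drive from its slowly tracked running mean.
        self.input_mean += self.mean_rate * (drive - self.input_mean)
        self.v = self.leak * self.v + (drive - self.input_mean)
        # Threshold adaptation (assumed form): a slow baseline that follows
        # the membrane potential, plus a fast spike-triggered term.
        self.slow_v += self.slow_rate * (self.v - self.slow_v)
        threshold = self.slow_v + self.margin + self.fast
        spiked = self.v > threshold
        self.fast *= self.jump_decay
        if spiked:
            self.v = 0.0              # reset after a spike
            self.fast += self.jump    # make an immediate second spike harder
        return spiked
```

With these assumed dynamics, a neuron driven by a constant input falls silent once its running mean has caught up with the input level, and it fires again when the input level jumps. This is the kind of sparse yet responsive activity the mechanisms are meant to support.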

The above mechanisms will be used to explore the interplay between adaptation, learning, activity and stability. The project will explore the following main hypotheses:

Adaptation is essential for, but independent of, learning. In other words, a neural network can adapt without learning, but adaptation can provide the stability that effective learning requires.

Homeostatic regulation of neural activity can mitigate issues commonly observed in deep neural networks, such as dead neurons and runaway excitation. The latter is especially pronounced in recurrent networks.

Networks of adaptive spiking neurons can maintain sparse yet stable activity patterns in noisy or fluctuating environments or in the presence of concept drift [10].

Sparse activity can directly mitigate the issue of catastrophic forgetting [11] by enforcing sparse weight updates, allowing SNNs to continually learn new behaviour on the fly without forgetting previously learned behaviour.
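The link between sparse activity and sparse weight updates in the last hypothesis can be illustrated with a small sketch. The text does not specify a learning rule, so the example assumes a generic spike-gated Hebbian update in which a weight changes only when both its presynaptic and postsynaptic neurons spike; the function name `gated_hebbian_update` and the learning rate are hypothetical. Under such a rule, the fraction of weights touched in one step is the product of the active fractions on the two sides.

```python
from typing import List, Tuple


def gated_hebbian_update(
    weights: List[List[float]],
    pre_spikes: List[int],
    post_spikes: List[int],
    lr: float = 0.1,
) -> Tuple[List[List[float]], int]:
    """Return updated weights and the number of entries that were modified.

    weights[i][j] connects presynaptic neuron j to postsynaptic neuron i and
    is changed only if both neurons spiked in this step (assumed rule).
    """
    updated = [row[:] for row in weights]  # leave the input untouched
    changed = 0
    for i, post in enumerate(post_spikes):
        if not post:
            continue  # silent postsynaptic neuron: its whole row is preserved
        for j, pre in enumerate(pre_spikes):
            if pre:
                updated[i][j] += lr
                changed += 1
    return updated, changed


# 10 x 10 weight matrix with 2 of 10 neurons active on each side.
weights = [[0.0] * 10 for _ in range(10)]
pre = [1, 0, 0, 0, 0, 1, 0, 0, 0, 0]
post = [0, 0, 1, 0, 0, 0, 0, 0, 1, 0]
updated, changed = gated_hebbian_update(weights, pre, post)
print(changed, "of", 100, "weights changed")  # prints: 4 of 100 weights changed
```

The sketch only shows that sparse spiking confines each update to a small subset of weights. Whether this is enough to prevent forgetting in practice is exactly what the hypothesis sets out to test.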

References

[1] Maass, W. Networks of spiking neurons: the third generation of neural network models. Neural Networks 10, 1659–1671 (1997).

[2] Barlow, H. B. & Földiák, P. Adaptation and Decorrelation in the Cortex. in The Computing Neuron (eds. Durbin, R., Miall, C. & Mitchison, G.) 54–72 (Addison-Wesley, 1989).

[3] Yedutenko, M., Howlett, M. H. C. & Kamermans, M. High Contrast Allows the Retina to Compute More Than Just Contrast. Frontiers in Cellular Neuroscience 14, (2021).

[4] Turrigiano, G. G. The Self-Tuning Neuron: Synaptic Scaling of Excitatory Synapses. Cell 135, 422–435 (2008).

[5] Gollisch, T. & Meister, M. Eye Smarter than Scientists Believed: Neural Computations in Circuits of the Retina. Neuron 65, 150–164 (2010).

[6] Hartmann, C., Miner, D. C. & Triesch, J. Precise Synaptic Efficacy Alignment Suggests Potentiation Dominated Learning. Frontiers in Neural Circuits 9, (2016).

[7] Fontaine, B., Peña, J. L. & Brette, R. Spike-Threshold Adaptation Predicted by Membrane Potential Dynamics In Vivo. PLoS Computational Biology 10, e1003560 (2014).

[8] Platkiewicz, J. & Brette, R. Impact of Fast Sodium Channel Inactivation on Spike Threshold Dynamics and Synaptic Integration. PLoS Computational Biology 7, e1001129 (2011).

[9] Lubejko, S. T., Fontaine, B., Soueidan, S. E. & MacLeod, K. M. Spike threshold adaptation diversifies neuronal operating modes in the auditory brain stem. Journal of Neurophysiology 122, 2576–2590 (2019).

[10] Gama, J., Žliobaitė, I., Bifet, A., Pechenizkiy, M. & Bouchachia, A. A survey on concept drift adaptation. ACM Computing Surveys 46, 44:1–44:37 (2014).

[11] Parisi, G. I., Kemker, R., Part, J. L., Kanan, C. & Wermter, S. Continual lifelong learning with neural networks: A review. Neural Networks 113, 54–71 (2019).