Multi-view satellite photogrammetry with Neural Radiance Fields

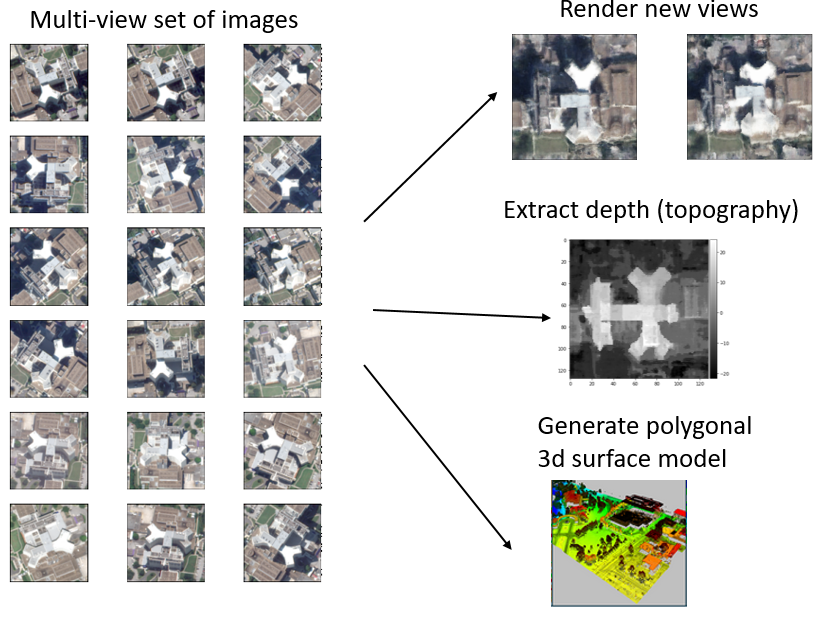

Constructing volumetric (3D) models of the Earth's surface from remote sensing observations has been a long-standing goal for many Earth Observation applications. Optical satellite images can be used to extract 3D information using the same principle that lets many living beings perceive depth with (at least) two eyes: photogrammetry, a.k.a. stereo vision. The objective of this project is to create a colored 3D volumetric representation of a scene from a collection of satellite images taken at different view angles.

Many current stereo vision methods fail in real-world applications, particularly when the images of a scene are captured far apart in time. Effects such as transient objects (clouds, cars, waves), seasonal changes (leaves, snow), and differences in lighting conditions are difficult to model.

This project aims to use new methods from the computer vision field, namely, Neural Radiance Fields [1], in an effort to perform photogrammetry using optical images alone in these challenging conditions.

Project overview

Recent research in computer vision has seen the emergence of Neural Rendering methods, which aim to address this issue by explicitly modeling certain aspects of the behavior of the underlying system. Neural Radiance Fields [1] model the environment as a continuous function of spatial coordinates (x, y, z) that outputs a 4D vector containing color and volume density (R, G, B, σ), using a simple dense Multi-Layer Perceptron network. This allows for a differentiable rendering process that synthesizes views by discretized ray-marching through the environment. The model is trained through self-supervision by computing a loss between the rendered images and the training images. The authors also showed great potential in modeling surface properties such as specularity by including the view angles as additional inputs to the network. Further work, called NeRF in the Wild [2], offers interesting ideas for modeling transient objects and lighting effects through Generative Latent Optimization.
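The discretized ray-marching step described above can be illustrated with a minimal pure-Python sketch. It is not the implementation from [1]: `toy_field` is a hypothetical stand-in for the trained network (a hard-coded green sphere), the fourth output is treated as a volume density as in [1], samples are evenly spaced rather than stratified, and `photometric_loss` is a plain mean squared error chosen for illustration.

```python
import math


def toy_field(x, y, z):
    """Hypothetical stand-in for the trained MLP: returns (r, g, b, sigma).

    Assumption for illustration: a green sphere of radius 1 centered at
    the origin, with empty space (zero density) everywhere else.
    """
    inside = x * x + y * y + z * z <= 1.0
    sigma = 10.0 if inside else 0.0
    return (0.1, 0.8, 0.2, sigma)


def render_ray(field, origin, direction, near, far, n_samples=64):
    """Composite the color seen along one ray using evenly spaced samples."""
    delta = (far - near) / n_samples      # length of each ray segment
    transmittance = 1.0                   # fraction of light not yet absorbed
    color = [0.0, 0.0, 0.0]
    for i in range(n_samples):
        t = near + (i + 0.5) * delta      # midpoint of segment i
        point = [o + t * d for o, d in zip(origin, direction)]
        r, g, b, sigma = field(*point)
        alpha = 1.0 - math.exp(-sigma * delta)   # opacity of this segment
        weight = transmittance * alpha           # contribution to the pixel
        color[0] += weight * r
        color[1] += weight * g
        color[2] += weight * b
        transmittance *= 1.0 - alpha
    return tuple(color), 1.0 - transmittance     # (rgb, accumulated opacity)


def photometric_loss(rendered, observed):
    """Mean squared error between a rendered and an observed pixel color."""
    return sum((a - b) ** 2 for a, b in zip(rendered, observed)) / len(rendered)


# One ray through the sphere and one ray that misses it.
hit_rgb, hit_opacity = render_ray(toy_field, (0.0, 0.0, -3.0), (0.0, 0.0, 1.0), 0.0, 6.0)
miss_rgb, miss_opacity = render_ray(toy_field, (5.0, 0.0, -3.0), (0.0, 0.0, 1.0), 0.0, 6.0)
```

Every operation in `render_ray` is differentiable with respect to the field outputs, which is what lets a loss on rendered pixels be propagated back to the network weights during training. In a real setup the field would be a neural network and the rays would come from the satellite camera models, neither of which is shown here.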

References:

- [1] Mildenhall, B., Srinivasan, P. P., Tancik, M., Barron, J. T., Ramamoorthi, R., & Ng, R. (2020). NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. arXiv preprint arXiv:2003.08934.

- [2] Martin-Brualla, R., Radwan, N., Sajjadi, M. S. M., Barron, J. T., Dosovitskiy, A., & Duckworth, D. (2020). NeRF in the Wild: Neural Radiance Fields for Unconstrained Photo Collections. arXiv preprint arXiv:2008.02268.