Vision manipulation of non-cooperative objects

Future space automation and robotics applications will require vision systems that allow robots to interact precisely with their environment. Such systems are essential for increasing the autonomy of robotic systems in space.

Vision systems will have applications in external robotics (Eurobot), internal robotics (Payload Tutor – PAT – a relocatable payload robot for ISS internal automation), geostationary satellite servicing (RObotic GEostationary orbit Restorer – ROGER), and robotic construction of orbital and planetary infrastructures (ESA Exploration Programme).

ESA’s VIsion MAnipulation of Non-Cooperative Objects (VIMANCO) activity has Eurobot as its primary target application. Eurobot’s purpose is to prepare for and assist with extra-vehicular activity (EVA) on the ISS.

A typical operational scenario for Eurobot is the placing of adjustable portable foot restraints at multiple locations on the ISS for use by astronauts during EVA. This involves ‘walking’ along the handrails and inserting the restraints into a fixture known as a worksite interface.

The European Robotic Arm (ERA) is already able to perform insertion tasks using vision. However, it requires a dedicated visual target to determine the position of the objects that must be grasped.

For Eurobot, it will not be possible to place a target on every object to be grasped. The vision system therefore has to be able to cope with non-cooperative objects, that is, objects that are not equipped with optical markers.

VIMANCO objectives

The objectives of the VIMANCO activity are to:

- define a vision system architecture for Eurobot, taking into account the Eurobot working environment and the application of vision techniques to Eurobot operations

- implement a vision software library to allow vision control for space robots

- build a prototype of the necessary hardware and software and demonstrate the combined system on the ESTEC Eurobot test bed

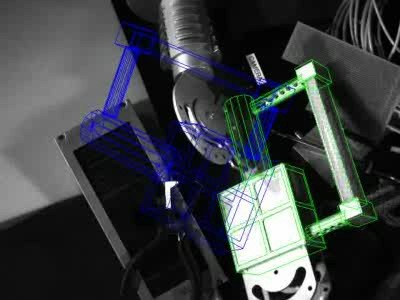

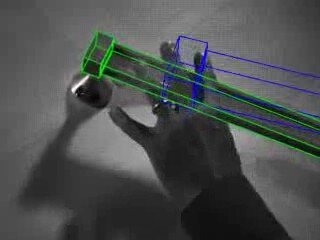

Vision-based control involves several steps. First, the object of interest has to be recognised in the image acquired by the camera or cameras. This recognition step must also provide a coarse estimate of the location of the object relative to the camera. Because recognition is usually time-consuming and the position data it supplies is not accurate enough to control the robot, a tracking process then takes over. Once the object has been recognised, it can be tracked from frame to frame, at video rate, using three-dimensional, model-based tracking algorithms. These algorithms can be used with a single camera or with short- or long-baseline stereoscopy.
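The split between a slow, coarse recognition step and a fast, accurate tracking step can be illustrated with a toy sketch. It is not the VIMANCO library and does not implement three-dimensional, model-based tracking: it assumes a 2D grey-level grid containing a single bright 3x3 blob, and the names `make_frame`, `recognise`, `track` and `follow` are hypothetical. Recognition scans the whole frame on a sparse grid and runs once; tracking searches only a small window around the previous estimate and runs on every frame.

```python
def make_frame(width, height, cx, cy):
    """Toy image (assumption): a 3x3 blob of value 5 with a peak of 9 at (cx, cy)."""
    frame = [[0] * width for _ in range(height)]
    for y in range(cy - 1, cy + 2):
        for x in range(cx - 1, cx + 2):
            if 0 <= x < width and 0 <= y < height:
                frame[y][x] = 5
    frame[cy][cx] = 9
    return frame


def recognise(frame, stride=2):
    """Global but coarse: sample the whole frame on a sparse grid.

    With a 3x3 blob and stride 2, at least one sample lands on the blob,
    so the result is within one pixel of the true centre.
    """
    samples = (
        (frame[y][x], x, y)
        for y in range(0, len(frame), stride)
        for x in range(0, len(frame[0]), stride)
    )
    _, x, y = max(samples)
    return x, y


def track(frame, previous, radius=2):
    """Local but exact: full-resolution search in a window around `previous`."""
    px, py = previous
    candidates = (
        (frame[y][x], x, y)
        for y in range(max(0, py - radius), min(len(frame), py + radius + 1))
        for x in range(max(0, px - radius), min(len(frame[0]), px + radius + 1))
    )
    _, x, y = max(candidates)
    return x, y


def follow(frames):
    """Recognise once on the first frame, then track on every frame."""
    estimate = recognise(frames[0])
    positions = []
    for frame in frames:
        estimate = track(frame, estimate)
        positions.append(estimate)
    return positions


if __name__ == "__main__":
    # Hypothetical object path; it moves by at most one pixel per frame.
    path = [(5, 5), (6, 5), (7, 6), (8, 7)]
    frames = [make_frame(16, 12, cx, cy) for cx, cy in path]
    print("coarse estimate:", recognise(frames[0]))
    print("tracked positions:", follow(frames))
```

The design choice mirrored here is that the expensive global search is paid only once, while the per-frame cost depends on the window size rather than the image size. The tracker loses the object if it moves outside the window between frames, at which point recognition would have to be run again.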

VIMANCO system components

The VIMANCO system comprises the following components:

- vision system – a stereo camera pair and two independent cameras, all with illumination systems

- vision system simulator – a 3D graphics tool used to reconstruct the robotic system and its environment and to produce virtual images

- vision processing and object recognition library – implements the functionality required for object recognition, object tracking and visual servoing

- automation and robotics simulator – replaces the robot controller functionality that is needed to validate and demonstrate the system

- control station – provides the human-machine interface to allow the operator to control the VIMANCO simulations